Every time we compute a differential $df = f_xdx+ f_ydy$, we're following a pattern that shows up so often that it has a name: a linear combination. If you later take a linear algebra course, you'll spend quite a bit of time on linear combinations, and you'll quickly adopt matrices to speed up the process of writing them.

Given $n$ vectors $\vec v_1, \vec v_2,\cdots,\vec v_n$ and $n$ scalars $c_1, c_2, \cdots, c_n$, the linear combination of these vectors using these scalars is the sum $$\sum_{i=1}^n c_i \vec v_i = c_1\vec v_1+c_2\vec v_2+\cdots+c_n\vec v_n.$$ Matrix notation and matrix products were invented to organize linear combinations into a compact, visually appealing form. We place each vector in a column of a matrix, and then place the corresponding scalars, in the same order, into a single column vector after the matrix. The linear combination above, in matrix form, becomes the matrix product $$c_1\vec v_1+c_2\vec v_2+\cdots+c_n\vec v_n = \begin{bmatrix} \begin{pmatrix}\\\vec v_1\\ \ \end{pmatrix} &\begin{pmatrix}\\\vec v_2\\ \ \end{pmatrix} &\cdots &\begin{pmatrix}\\\vec v_n\\ \ \end{pmatrix} \end{bmatrix} \begin{pmatrix}c_1\\c_2\\\vdots\\c_n\end{pmatrix}.$$
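
To see that the two sides of this equation really agree, here is a minimal Python sketch. The vectors `v1`, `v2`, `v3`, the scalars in `c`, and both helper functions are made up for illustration; they are not part of the course materials. One function adds up the scaled vectors directly, and the other builds the rows of the matrix whose columns are the vectors and multiplies by the column of scalars.

```python
def linear_combination(vectors, scalars):
    """Return c_1*v_1 + ... + c_n*v_n, with each vector given as a list of numbers."""
    dim = len(vectors[0])
    total = [0] * dim
    for c, v in zip(scalars, vectors):
        for k in range(dim):
            total[k] += c * v[k]
    return total


def matrix_times_column(columns, scalars):
    """Multiply the matrix whose columns are `columns` by the column vector `scalars`.

    Entry k of the product is row k of the matrix dotted with the scalars.
    """
    dim = len(columns[0])
    rows = [[col[k] for col in columns] for k in range(dim)]
    return [sum(entry * c for entry, c in zip(row, scalars)) for row in rows]


# Illustrative data (an assumption, chosen only to keep the arithmetic small).
v1, v2, v3 = [1, 0, 2], [0, 1, -1], [3, 1, 0]
c = [2, -1, 4]

combo = linear_combination([v1, v2, v3], c)
product = matrix_times_column([v1, v2, v3], c)
print(combo, product)  # both are [14, 3, 5]
```

The two results match because the matrix product is defined so that it computes exactly this linear combination of the columns.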

The derivative (or total derivative) of a function is a matrix whose columns are the partial derivatives of the function. We insert the partial derivatives into the columns of the matrix in the same order in which the variables are listed for the function. Some examples follow.

- For the function $f(x)$, the derivative is $Df(x) = \begin{bmatrix}f_x\end{bmatrix} =\begin{bmatrix}\frac{df}{dx}\end{bmatrix}$, with differential $df = f_xdx$.

- For the function $f(x,y)$, the derivative is $Df(x,y) = \begin{bmatrix}f_x&f_y\end{bmatrix}$, with differential $df = f_xdx+f_ydy$.

- For the function $f(r,s,t)$, the derivative is $Df(r,s,t) = \begin{bmatrix}f_r&f_s&f_t\end{bmatrix}$, with differential $df = f_rdr+f_sds+f_tdt$.

- For the function $\vec r(u,v)$, the derivative is $D\vec r(u,v) = \begin{bmatrix}\vec r_u&\vec r_v\end{bmatrix}$, with differential $d\vec r = \vec r_udu+\vec r_vdv$.
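
If you'd like to check a derivative numerically, the following Python sketch may help. It assumes a made-up function $f(x,y)=xy^3$ (not one of the tasks below) and estimates each partial with a central difference; the helper names `partial`, `total_derivative`, and `differential` are hypothetical. It then forms the differential $df = f_xdx+f_ydy$ as a row of partials times a column of small changes, and compares it with the actual change in $f$.

```python
def partial(f, point, i, h=1e-5):
    """Central-difference estimate of the partial of f with respect to input number i."""
    up, down = list(point), list(point)
    up[i] += h
    down[i] -= h
    return (f(*up) - f(*down)) / (2 * h)


def total_derivative(f, point):
    """Row matrix of partials, listed in the same order as the inputs of f."""
    return [partial(f, point, i) for i in range(len(point))]


def differential(Df, changes):
    """Linear combination of the partials, using the small changes as the scalars."""
    return sum(p * d for p, d in zip(Df, changes))


def f(x, y):
    # Illustrative function (an assumption): f_x = y**3 and f_y = 3*x*y**2.
    return x * y**3


point = (2.0, 1.0)
Df = total_derivative(f, point)      # close to [1, 6]
dx, dy = 0.01, -0.02
df = differential(Df, (dx, dy))      # close to 1*(0.01) + 6*(-0.02) = -0.11
actual = f(2.0 + dx, 1.0 + dy) - f(2.0, 1.0)
print(Df, df, actual)
```

The differential and the actual change are close but not equal; the differential only captures the linear part of the change, so the agreement improves as $dx$ and $dy$ shrink.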

Task 15.1

Let's practice using the definitions above. For each function below, (a) compute and label all relevant partial derivatives. Then (b) write the differential $df$ as a linear combination of the partial derivatives. Then (c) write $df$ as a matrix product. Finish by (d) stating the total derivative $Df$ of the function.

- $f(x,y)=x^2y$ [Clearly label all 4 things you were asked to find, namely (a) all partials, (b) $df$ as a linear combination, (c) $df$ as a matrix product, and (d) the derivative $Df$.]

- $f(x,y)=x^2+2xy+3y^2$

- $f(x,y,z)=3xz-x^2y$

Task 15.2

The gradient of a function $f(x,y)$ is itself a function. When we compute the partial derivatives of the gradient, we obtain vectors instead of numbers. This task has you examine the differential, partials, and derivative of the gradient of a function. We'll soon see that the derivative of the gradient is precisely the key to classifying maximums and minimums of a function.

The function $f(x,y) = x^2+3xy+2y^2$ has the gradient $\vec \nabla f = (2x+3y,3x+4y)$. This is the vector field $$\vec F = (2x+3y,3x+4y).$$

- Find the differential $d\vec F$ and write it as the linear combination $$d\vec F = \begin{pmatrix}?\\?\end{pmatrix}dx+\begin{pmatrix}?\\?\end{pmatrix}dy.$$

- Rewrite the above differential as a matrix product, so fill in the blanks below. $$d\vec F = \begin{pmatrix}?&?\\?&?\end{pmatrix}\begin{pmatrix}?\\?\end{pmatrix}.$$

- Clearly label the two partial derivatives $\frac{\partial \vec F}{\partial x}$ and $\vec F_y$.

- State the total derivative $D\vec F(x,y)$ (it should be a 2 by 2 matrix). [Note: We also write the derivative of the gradient as $D^2f(x,y)$, or $D\vec\nabla f(x,y)$, and call the resulting matrix the Hessian of $f$. Some people use the notation $\vec \nabla ^2 f$ for the Hessian, though this notation is also used for the Laplacian $\vec \nabla \cdot (\vec \nabla f)$, which is a very different quantity.]

- The function $f(x,y) = xy^2$ has gradient $\vec F = (y^2, 2xy)$. Repeat the above to obtain the differential of $\vec F$ (as a linear combination, and in matrix form), the partials of $\vec F$, and the derivative $D\vec F(x,y)$.
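
One way to check your answers to a task like this is numerically. The Python sketch below uses a different, made-up example: the gradient $\vec F = (3x^2y, x^3)$ of the function $g(x,y)=x^3y$, which is not one of the functions above. It estimates the vector-valued partials $\vec F_x$ and $\vec F_y$ with central differences, places them in the columns of a matrix, and forms $d\vec F$ as a linear combination. The helper name `partial_vector` is hypothetical.

```python
def F(x, y):
    """Gradient of the illustrative function g(x, y) = x**3 * y (an assumption)."""
    return (3 * x**2 * y, x**3)


def partial_vector(F, point, i, h=1e-5):
    """Central-difference estimate of the partial of F with respect to input number i.

    Because F outputs a vector, the partial is a vector as well.
    """
    up, down = list(point), list(point)
    up[i] += h
    down[i] -= h
    return [(a - b) / (2 * h) for a, b in zip(F(*up), F(*down))]


point = (1.0, 2.0)
F_x = partial_vector(F, point, 0)    # close to (6xy, 3x^2) = (12, 3)
F_y = partial_vector(F, point, 1)    # close to (3x^2, 0)   = (3, 0)

# Store DF as a list of rows, so that its columns are F_x and F_y.
DF = [[F_x[k], F_y[k]] for k in range(2)]

# The differential dF = F_x dx + F_y dy, computed as a matrix product.
dx, dy = 0.01, 0.02
dF = [DF[k][0] * dx + DF[k][1] * dy for k in range(2)]
print(DF, dF)
```

To test your own work, replace `F` with the vector field from the task and compare the printed matrix with the one you computed by hand at the same point.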

Task 15.3

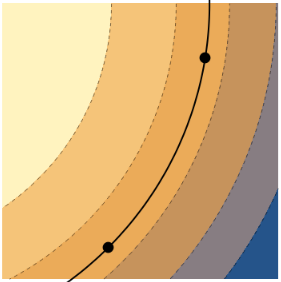

Suppose a rover moves along the level curve of a function $f(x,y)$ following the path $\vec r(t)=(x,y)$. An example of such a scenario is shown below (note that lighter colors correspond to greater outputs of $f(x,y)$. )

Label the dots $A$ and $B$ (it doesn't matter which you label $A$ or $B$). Our goal is to prove that the gradient of $f$ is normal to level curves.

- At each dot in the picture, draw a vector that represents a possible option for $\ds\frac{d\vec r}{dt} = \left(\frac{dx}{dt},\frac{dy}{dt}\right)$.

- Suppose $\vec r(0)=A$ and $\vec r(1)=B$. If we know that $f(\vec r(0)) = 7$, then what is $f(\vec r(1))$? Explain.

- As the rover moves along $\vec r(t)$, how much does $f$ change? Use this to give a value for $\ds\frac{df}{dt}$.

- Explain why $\vec \nabla f$ and $\ds\frac{d\vec r}{dt}$ are orthogonal at any point along the level curve. (Hint: Divide each term of the differential $df = f_xdx+f_ydy$ by $dt$, and then write the right side as a dot product. Since we are on a level curve, we know the value of $\ds\frac{df}{dt}$.)

- At point $A$, draw a vector that points in the same direction as $\vec \nabla f(A)$. Use your work above to explain why the gradient of $f$ must be normal to the level curve.
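
A numerical check is not a proof, but it can make the claim feel concrete. The Python sketch below assumes a made-up example that is not the function in the picture: $f(x,y)=x^2+y^2$, with the rover following the path $\vec r(t)=(2\cos t, 2\sin t)$ around the level curve $f=4$. At several times it prints the value of $f$ on the path and the dot product $\vec\nabla f\cdot \frac{d\vec r}{dt}$.

```python
import math


def f(x, y):
    # Illustrative function (an assumption); its level curves are circles.
    return x**2 + y**2


def grad_f(x, y):
    """Gradient of f, computed by hand: (f_x, f_y) = (2x, 2y)."""
    return (2 * x, 2 * y)


def r(t):
    """Path around the level curve f = 4."""
    return (2 * math.cos(t), 2 * math.sin(t))


def dr_dt(t):
    """Velocity of the path, computed by hand."""
    return (-2 * math.sin(t), 2 * math.cos(t))


times = [0.0, 0.5, 1.0, 2.0, 4.0]
values = [f(*r(t)) for t in times]
dots = [sum(g * v for g, v in zip(grad_f(*r(t)), dr_dt(t))) for t in times]

for t, value, dot in zip(times, values, dots):
    print(f"t={t}: f = {value:.6f}, grad . dr/dt = {dot:.2e}")
```

In this example the value of $f$ stays at 4 (up to rounding) and the dot product is zero (up to rounding) at every sampled time, which is what the argument in this task predicts for any level curve.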

Task 15.4

The last problem for prep each day will point to relevant problems from OpenStax. Spend 30 minutes working on problems from the sections below.

- Return to any of the previous day's OpenStax problems to locate extra practice.